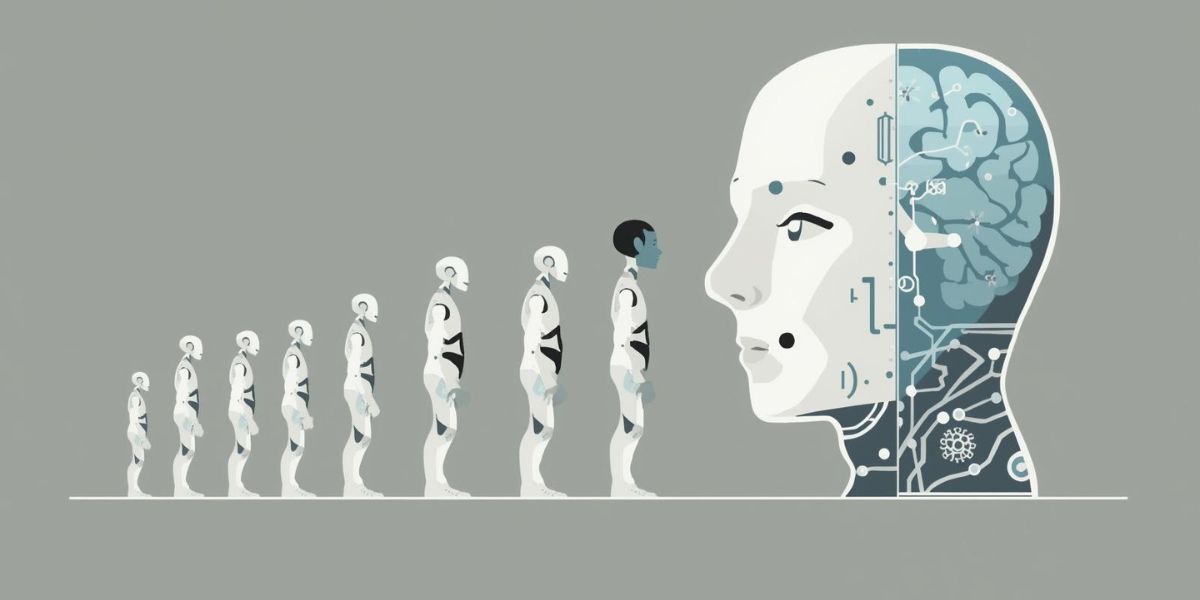

The journey of AI development has been marked by seismic shifts and just as many over-predictions. Every few years, we’re told that autonomy is just around the corner.

Yet most current models remain far from the kind of deep reasoning, reliability, and real-world adaptability required for full autonomy or lasting economic transformation.

We’ve built systems that perform, not systems that understand.

The Hype Cycle Never Ends

AI has always lived in a state of duality: breathtaking progress paired with inflated expectations.

Every breakthrough, from GPT to robotics, from self-driving cars to copilots, sparks the same narrative: “This time, it’s different.”

And yet, the same constraints reappear: fragile reasoning, brittle adaptation, and poor generalization outside the training data.

The problem isn’t that AI isn’t advancing; it’s that progress is being mistaken for arrival.

Intelligence Without Reliability

True autonomy requires not just intelligence, but reliability under uncertainty.

A human pilot can navigate unexpected weather, interpret ambiguous signals, and make judgment calls that balance risk and intuition.

AI, by contrast, operates within the boundaries of its training distribution: confident even when wrong, fluent even when lost.

Reasoning without grounding is imitation. Confidence without verification is noise.

Until AI systems can reason under pressure and in context, autonomy will remain a simulation, not a capability.

Economic Impact: Promised vs. Proven

Predictions about AI’s economic transformation are often exponential in imagination, linear in reality. Yes, AI can automate the production of text and images, along with certain workflows. But automation isn’t transformation.

Real economic value comes from trust, reliability, and integration, not novelty.

A chatbot that fails one out of twenty times is not a tool; it’s a liability. A model that hallucinates financial advice isn’t a disruption; it’s a risk.

The future of AI in the economy depends less on scaling intelligence and more on earning reliability.

The Missing Ingredient: Grounded Reasoning

What’s still missing is the ability to connect abstract reasoning with physical and causal understanding.

Humans reason through embodiment: we sense, we test, we fail, we learn. AI does none of this. It simulates reasoning patterns but never experiences the consequences of being wrong.

Until systems can interact with the world, perceive feedback, and build causal intuition, they’ll remain spectators of reality, not participants in it.

From Over-Prediction to Real Progress

The next phase of AI must move beyond prediction and performance metrics.

It must focus on stability, truthfulness, and transferable reasoning, qualities that allow models to understand before they act.

AI doesn’t need to be more magical; it needs to be more dependable.

Only then can it become truly autonomous and truly transformative.